Emerging Trends in Intelligent Interface Design: What to Expect in 2019

The human-machine interface (HMI) has not evolved to fit the real world of the 21st century. The basic model dates back to the early 1970s: technology waits for a request from a person, then guides them to push buttons to trigger a response. That made sense at the time, and it kept making sense for decades.

Back then, most interactions between workers and computers happened at a desk, with the person seated directly in front of the machine. That’s not how the modern user interacts with technology.

HMI Must Evolve (With Help)

Technology now goes with us everywhere. It’s fully portable, yet the HMI hasn’t changed. What’s happening now, and what will continue over the next year, is a growing focus on intelligent user interface (IUI) design. Technology no longer needs to be in the “box.”

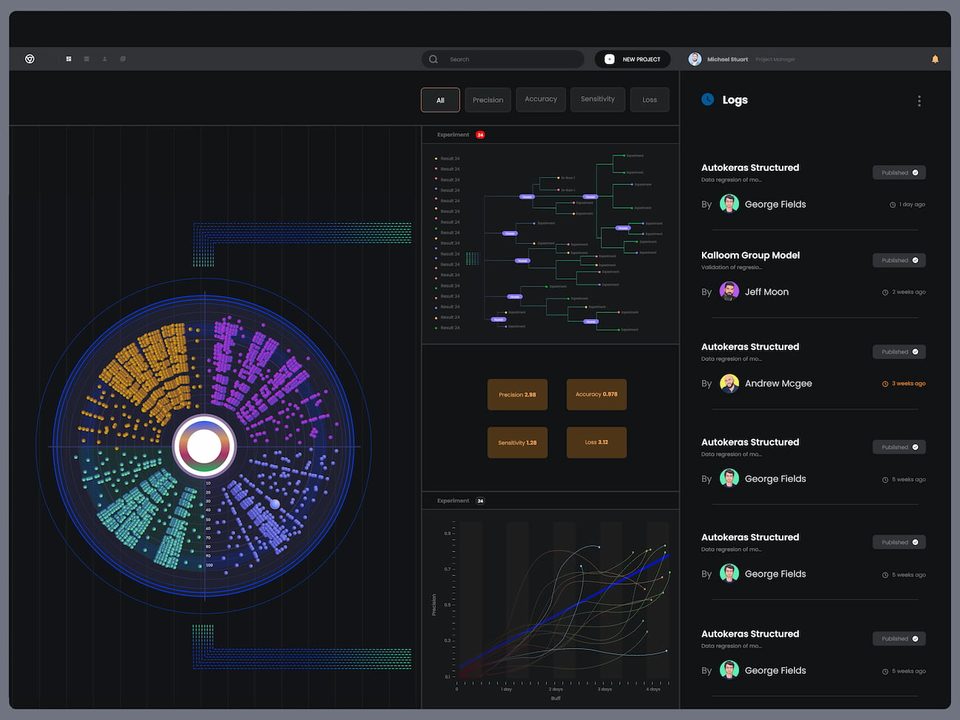

IUI brings together artificial intelligence (AI), machine learning, sensors, and robotics. These technologies work together to identify and navigate a situation so that they can act on the user’s behalf.

IUI uses layered neural networks to “learn” from examples. Within a specific, narrow frame of reference, machine learning can then match or exceed human capabilities. You can see the impact of this in image recognition, where machines now rival or outperform humans on some well-defined tasks.
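To make “learning from examples” concrete, here is a minimal sketch of a single artificial neuron (a perceptron), the building block that layered networks stack many times over. It is not a layered network and not how any particular IUI product works; the AND-gate data, the function names, and the learning rate are all assumptions chosen for illustration.

```python
# Toy illustration: one artificial neuron learns logical AND from labeled examples.
# The data set and all names here are hypothetical, chosen only for this sketch.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of the inputs is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1):
    """Nudge the weights toward the right answer each time a prediction is wrong."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
learned = [predict(weights, bias, inputs) for inputs, _ in EXAMPLES]
print(learned)  # [0, 0, 0, 1]
```

Nobody writes the AND rule into the program. The neuron arrives at it by correcting its own mistakes, which is the same idea that deep networks apply at a much larger scale.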

The need to interact with technology is now almost constant, particularly through smartphones and wearables, and it is expanding to autonomous cars and smart cities. IUI represents an evolution of user interface design across all fields of tech.

AI Takes Interfaces Forward

With basic AI applications, such as chatbots, what are currently complex tasks will soon become simple commands. How will this look in the future? Consider how long it takes for IT staff to search a company’s servers for every document related to a certain account or topic.

This currently requires huge amounts of human time and interaction. Imagine instead simply asking a smart bot to do it for you. This saves time, which creates efficiencies, which in turn saves businesses real money. PwC estimates that AI-driven productivity gains could add up to $6.6 trillion to the global economy by 2030.
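As a rough sketch of what such a bot would do behind a simple command, the snippet below scans a set of documents for a topic. The in-memory `DOCUMENTS` dictionary, the file paths, and the `find_documents` helper are all hypothetical stand-ins; a real assistant would sit on top of a proper search index and a language-understanding layer.

```python
# Hypothetical stand-in for a company's file servers: path -> document text.
DOCUMENTS = {
    "contracts/acme_renewal.txt": "Renewal terms for the Acme account, effective next quarter.",
    "notes/standup.txt": "Team standup notes: the printer is broken again.",
    "invoices/acme_march.txt": "Invoice for ACME covering March services.",
}


def find_documents(topic, documents):
    """Return the paths of all documents whose text mentions the topic (case-insensitive)."""
    needle = topic.lower()
    return sorted(path for path, text in documents.items() if needle in text.lower())


# The "simple command": one call replaces a manual search through every folder.
matches = find_documents("acme", DOCUMENTS)
print(matches)  # ['contracts/acme_renewal.txt', 'invoices/acme_march.txt']
```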

This example may make it seem like AI is just a glorified task taker, and many in the field do see AI as nothing more than an automated labor-saver. While it’s true that AI is well suited to automating processes, it deserves more credit than that. AI can also operate as an effective liaison between users and technology.

UI Trends—AI As Interface

In most cases, users tap only a small fraction of a computer’s potential. Why? Because interaction between humans and computers still runs mostly through manual input. Computers have the potential; humans just need a better way to tap into it.

AI as a user interface delivers a tremendous amount of opportunity. This could eventually lead to the direct linking of the human brain and a computer. IUI is closer to achieving this than you might think.

How much of your computer’s brain are you using?

Chatbots

Chatbots are extremely popular for customer service because they can answer most general questions without any human involvement. Their abilities will continue to evolve, as will the design of the interface.
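A minimal sketch of that idea, assuming a small hand-written FAQ and plain word overlap as the matching rule (production chatbots use far richer language models). The questions, the answers, the `min_overlap` threshold, and the hand-off to a human agent are all illustrative assumptions.

```python
import re

# Hypothetical FAQ: known question -> canned answer.
FAQ = {
    "What are your opening hours?": "We are open 9am to 5pm, Monday to Friday.",
    "How do I reset my password?": "Use the 'Forgot password' link on the sign-in page.",
}


def words(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def answer(question, faq, min_overlap=2):
    """Reply with the answer whose known question shares the most words with the input."""
    asked = words(question)
    best_answer, best_score = None, 0
    for known_question, reply in faq.items():
        score = len(asked & words(known_question))
        if score > best_score:
            best_answer, best_score = reply, score
    if best_score >= min_overlap:
        return best_answer
    # Too little in common with any known question: hand the conversation to a person.
    return "Let me connect you with a human agent."


print(answer("How can I reset my password?", FAQ))
print(answer("Do you sell gift cards?", FAQ))
```

The first question is close enough to a known one to be answered automatically; the second falls through to the human hand-off.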

The big question is, does it need to feel human? Does a customer expect service from a person? Or would they rather talk to a machine? With digital natives now dominating our population, their preferences are seriously influential, and they tend towards the machine over the human.

Consider this prediction from Gartner: by the year 2020, the average person will have more conversations with bots than with their husband or wife.

Many of these conversations won’t involve a screen either: Accenture envisions that, within the next six years, most interfaces won’t have one. Rather, the interactions will be integrated into daily tasks.

In effect, AI will become the digital face and voice of a brand.

Sensor-Based Interactions

Sensor-based paradigms are emerging that need neither voice nor keyboard, and they could displace these traditional tools. One example is Microsoft’s Kinect, which has helped develop natural user interfaces based on touchless hand gestures. Other advanced examples include a wrist device that captures ambient sounds to detect the type and amount of food consumed, which could help anyone with dietary restrictions.

This trend is only beginning. Google has joined in, partnering with Levi’s on Project Jacquard, which builds sensors into clothing. The sensors reportedly could alert wearers to weight gain, and they allow users to make gestures such as swiping to interact with their smartphones.
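To show how a gesture such as a swipe might be recognized from sensor readings, here is a minimal sketch. It assumes the sensor reports a short series of horizontal positions normalized to the range 0 to 1; the `classify_swipe` function and its `min_distance` threshold are hypothetical and not taken from Kinect or Project Jacquard.

```python
def classify_swipe(x_positions, min_distance=0.3):
    """Classify a sequence of horizontal positions (0.0 = left edge, 1.0 = right edge).

    A movement counts as a swipe only if the net travel between the first and
    last reading is at least `min_distance`.
    """
    if len(x_positions) < 2:
        return "none"
    displacement = x_positions[-1] - x_positions[0]
    if displacement >= min_distance:
        return "swipe_right"
    if displacement <= -min_distance:
        return "swipe_left"
    return "none"


print(classify_swipe([0.1, 0.3, 0.6]))   # swipe_right
print(classify_swipe([0.8, 0.5, 0.2]))   # swipe_left
print(classify_swipe([0.40, 0.45]))      # none
```

The threshold is the key design choice: it keeps small, accidental movements from being read as commands.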

Pervasive Affective Computing

Affective computing is an important part of developing IUI, because it gives an interface the ability to detect and respond to the emotional needs of users. Think of how humans show emotion: through language, facial expressions, posture, or physiological responses such as an elevated heart rate or dilated pupils. Until recently, getting a computer to understand these signals required extremely sophisticated systems.

However, affective computing is showing signs of adoption. It’s being used in educational settings, where the system perceives and reacts to a student’s mood, whether that is confusion, interest, or another psychological state.
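The “perceive, then react” loop can be sketched in a few lines. This example assumes that some upstream component has already estimated the student’s mood and a confidence score; the mood labels beyond confusion and interest, the responses, and the `min_confidence` threshold are all hypothetical.

```python
# Hypothetical mapping from a perceived mood to a tutoring response.
RESPONSES = {
    "confusion": "Slow down and show a simpler worked example.",
    "interest": "Offer a more challenging follow-up exercise.",
}
DEFAULT_RESPONSE = "Continue the lesson as planned."


def choose_response(mood, confidence, min_confidence=0.6):
    """Adapt the lesson only when the mood estimate is reasonably trustworthy."""
    if confidence < min_confidence:
        return DEFAULT_RESPONSE
    return RESPONSES.get(mood, DEFAULT_RESPONSE)


print(choose_response("confusion", confidence=0.9))
print(choose_response("interest", confidence=0.4))
```

Falling back to the default when confidence is low reflects a cautious design choice: a wrong guess about someone’s mood can be more disruptive than no adaptation at all.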

Google Glass is an often-cited example of this type of IUI. Applications built on it have aimed to determine the emotional and physiological state of users, though how accurate they are depends on the application and the setting.

Challenges Ahead for IUI

Because IUI cuts across many disciplines and has so many possible applications, experts from different fields have to work together to move it from where it is now to the next stage. With any new technology, especially one that involves machine learning, there are starts and stops along the way. IUI has certainly not stalled, but it’s not mainstream yet. The next year will bring more challenges, along with more opportunities.

Read also about the intelligent interface for voice.

Browse more

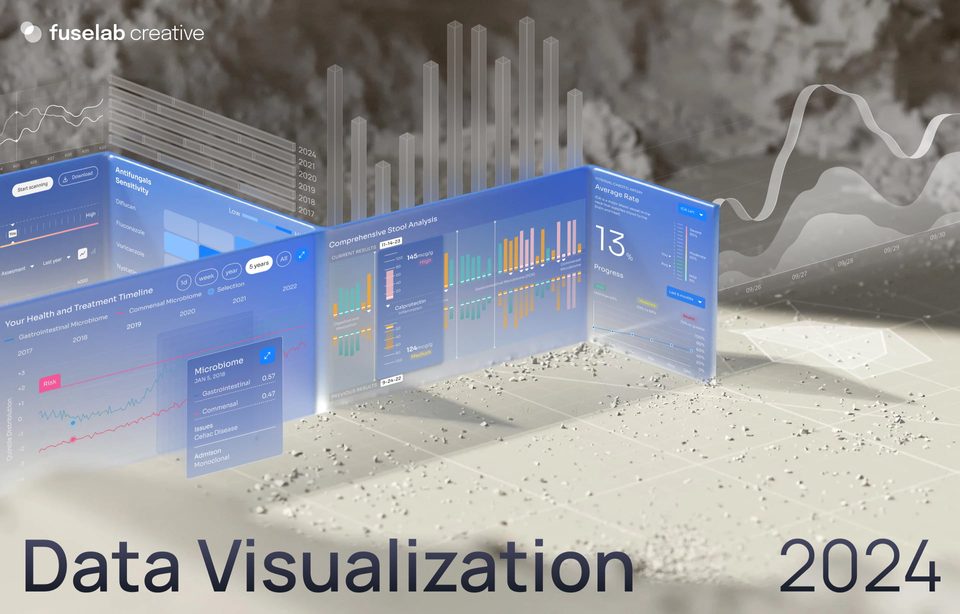

Data Visualization Trends 2024